The Shibboleth IdP V3 software has reached its End of Life and is no longer supported. This documentation is available for historical purposes only. See the IDP4 wiki space for current documentation on the supported version.

Metadata Early Warning System

This article describes a proof-of-concept implementation of a metadata early warning system designed to work in conjunction with a Shibboleth FileBackedHTTPMetadataProvider.


The main component of the implementation is a specific metadata filter (md_require_timestamps.bash) written in bash. The filter ensures that all of the following conditions are true:

- The top-level element of the metadata file is decorated with a @validUntil attribute

- The top-level element of the metadata file has an md:Extensions/mdrpi:PublicationInfo child element (which necessarily has a @creationInstant attribute)

- The actual length of the validity interval (in metadata) does not exceed a given maximum length

In other words, the filter is a superset of the Shibboleth RequiredValidUntil metadata filter. Like the RequiredValidUntil filter, the bash filter rejects metadata that never expires or for which the validity interval is too long (both of which undermine the usual trust model). In addition, the filter ensures that the metadata is associated with a @creationInstant attribute. This important feature allows the filter to warn if the metadata is stale, long before the metadata expires.

As a side effect, the filter persists the values of the @creationInstant and @validUntil attributes to a log file. It then converts a portion of the log file to JSON. Here is the simplest example of a JSON array with one element:

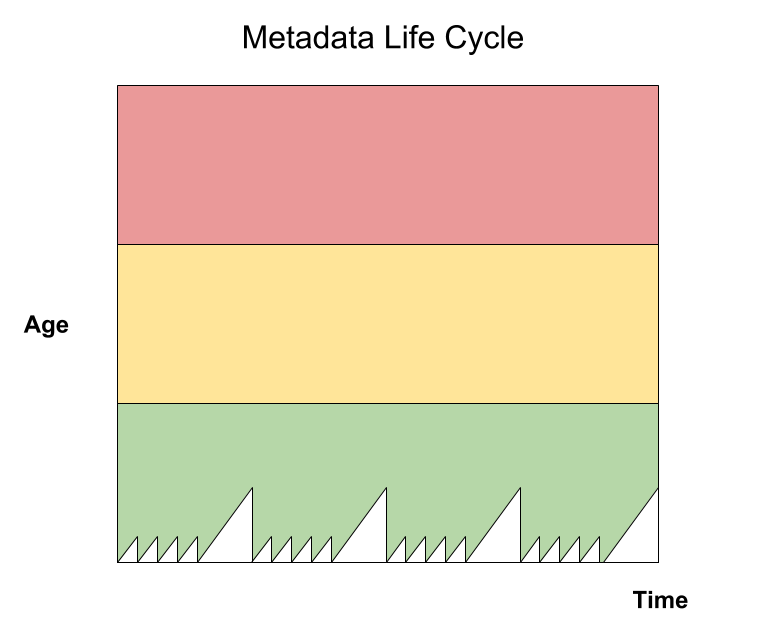

The data in the JSON file are sufficient to construct a time-series plot. For example:

The life cycle depicted above suggests that a fresh metadata file is signed on business days (M–F) only. Over the weekend, the age of the metadata spikes upward as expected.

Getting Started

Download and install the following projects from GitHub:

- The bash-library project

- The saml-library project

The following subsections outline the installation process.

Download the source code

If you don't have git installed on your client machine, you can download bash-library.zip and saml-library.zip instead.

Installing the Bash Library

First download the bash-library source code. If you have git installed, you can clone the repository as follows:

$ git clone https://github.com/trscavo/bash-library.git

Now install the source into /tmp like this:

$ export BIN_DIR=/tmp/bin

$ export LIB_DIR=/tmp/lib

$ ./bash-library/install.sh $BIN_DIR $LIB_DIR

or install the source into your home directory:

$ export BIN_DIR=$HOME/bin

$ export LIB_DIR=$HOME/lib

$ ./bash-library/install.sh $BIN_DIR $LIB_DIR

Either way, a given target directory (BIN_DIR or LIB_DIR) will be created if one doesn't already exist.

Installing the SAML Library

Next download the saml-library source code by cloning the repository:

$ git clone https://github.com/trscavo/saml-library.git

Install the source on top of the previous installation:

$ ./saml-library/install.sh $BIN_DIR $LIB_DIR

Besides BIN_DIR and LIB_DIR, a few additional environment variables are needed.

Configuring the Bash Environment

Assuming your OS defines TMPDIR, the following environment variables will suffice:

$ export CACHE_DIR=/tmp/http_cache

$ export LOG_FILE=/tmp/bash_log.txt

Some OSes define TMPDIR and some do not. In any case, a temporary directory by that name is required to use these scripts.
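Because the scripts need TMPDIR, one option is to give it a fallback value before running anything else. The following is a minimal sketch and is not part of either library: the function name ensure_tmpdir and the /tmp fallback are assumptions made for illustration.

```shell
# Hypothetical helper (not part of bash-library or saml-library).
# Gives TMPDIR a fallback value and checks that the directory is usable.
ensure_tmpdir () {
    # Assumption: /tmp is an acceptable fallback on this system.
    TMPDIR="${TMPDIR:-/tmp}"
    export TMPDIR
    if [ -d "$TMPDIR" ] && [ -w "$TMPDIR" ]; then
        return 0
    fi
    echo "TMPDIR ($TMPDIR) is not a writable directory" >&2
    return 1
}

ensure_tmpdir && echo "Using TMPDIR=$TMPDIR"
```

If TMPDIR is already defined, the function leaves its value alone and only verifies that the directory exists and is writable.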

Leveraging a FileBackedHTTPMetadataProvider

The rest of this article assumes you have configured a FileBackedHTTPMetadataProvider in the Shibboleth IdP. The backing file will be used as a source of (trusted) metadata:

$ idp_home=/path/to/idp/home/

$ backing_file="${idp_home%%/}/metadata/federation-metadata.xml"

The metadata configured in the FileBackedHTTPMetadataProvider need not be distributed by a federation but it turns out that federation metadata typically has the desired properties:

- The metadata file is signed by the registrar

- The top-level element of the metadata file is decorated with a @validUntil attribute

- The top-level element of the metadata file is associated with a @creationInstant attribute (i.e., it has an md:Extensions/mdrpi:PublicationInfo child element)

In particular, a federation that participates in eduGAIN necessarily supports the @creationInstant attribute (since eduGAIN requires it).

Configuring the Filters

Federations publish metadata files with Validity Intervals of various lengths. For the sake of illustration, let’s assume the actual Validity Interval in metadata is two weeks (which is in fact quite common):

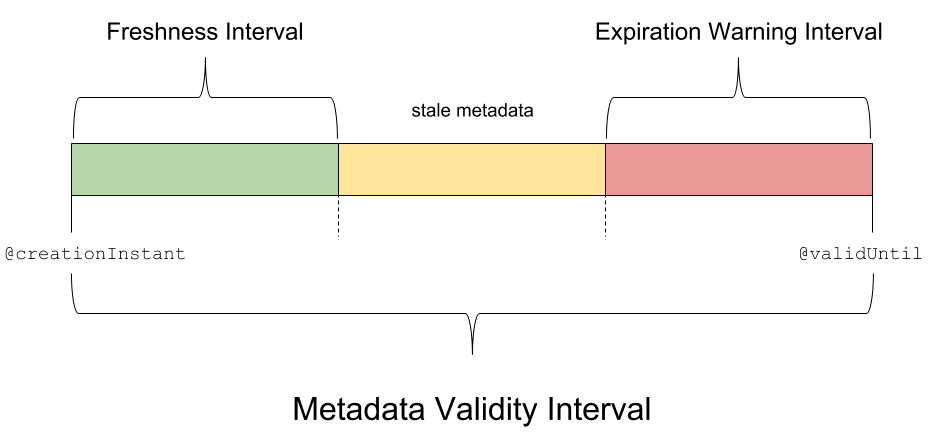

$ maxValidityInterval=P14D

As described in the story referenced at the beginning of this article, to conceptualize the metadata early warning system, we divide the Validity Interval into three subintervals: the Freshness Interval (bounded on the left by the @creationInstant attribute), the Expiration Warning Interval (bounded on the right by the @validUntil attribute), and a no-name subinterval sandwiched in the middle. In effect, the @creationInstant and @validUntil attributes partition the Validity Interval into GREEN, YELLOW, and RED subintervals, respectively.

The partition is determined by the lengths of the Freshness Interval and the Expiration Warning Interval. The choice of subinterval lengths depends on the signing frequency of federation metadata. If we assume the federation publishes fresh metadata at least once every business day, the following subinterval lengths make sense (but YMMV):

$ expirationWarningInterval=P3D

$ freshnessInterval=P5D
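To make the partition concrete, here is a small sketch that classifies a point in time as GREEN, YELLOW, or RED for a 14-day Validity Interval with the subinterval lengths chosen above. It is not taken from the filters themselves: the function name classify_metadata, the use of epoch seconds, and the handling of the exact boundary instants are all assumptions.

```shell
# Hypothetical helper (not part of bash-library or saml-library).
# Arguments (all integers): now, creationInstant and validUntil as epoch seconds,
# then the Freshness Interval and Expiration Warning Interval in seconds.
classify_metadata () {
    local now created expires fresh warn
    now=$1; created=$2; expires=$3; fresh=$4; warn=$5
    if [ "$now" -ge "$expires" ]; then
        echo EXPIRED
    elif [ "$now" -ge $(( expires - warn )) ]; then
        echo RED       # inside the Expiration Warning Interval
    elif [ "$now" -gt $(( created + fresh )) ]; then
        echo YELLOW    # stale, but not yet soon-to-be-expired
    else
        echo GREEN     # inside the Freshness Interval
    fi
}

day=86400
created=1522350166                  # 2018-03-29T19:02:46Z
expires=$(( created + 14 * day ))   # 2018-04-12T19:02:46Z (P14D later)
fresh=$(( 5 * day ))                # freshnessInterval=P5D
warn=$(( 3 * day ))                 # expirationWarningInterval=P3D

classify_metadata $(( created + 1 * day ))  "$created" "$expires" "$fresh" "$warn"   # GREEN
classify_metadata $(( created + 7 * day ))  "$created" "$expires" "$fresh" "$warn"   # YELLOW
classify_metadata $(( created + 12 * day )) "$created" "$expires" "$fresh" "$warn"   # RED
```

With these lengths, the metadata is GREEN for the first five days, YELLOW for the next six, and RED for the final three.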

Pipelining the Metadata

With that, let’s process the metadata in the backing file:

$ /bin/cat $backing_file \

| $BIN_DIR/md_require_valid_metadata.bash -E $expirationWarningInterval -F $freshnessInterval \

| $BIN_DIR/md_require_timestamps.bash -M $maxValidityInterval \

| $BIN_DIR/md_parse.bash \

| /usr/bin/tail -n 2

creationInstant 2018-03-29T19:02:46Z

validUntil 2018-04-12T19:02:46Z

Note that there are two metadata filters configured in the above pipeline. The first filter (md_require_valid_metadata.bash) forces the metadata to be valid while the second filter (md_require_timestamps.bash) requires the timestamps to be present. This is not unlike what the Shibboleth IdP does when you nest a RequiredValidUntil metadata filter inside a metadata provider.

Monitor the log file in real time

Open two terminal windows. In one window, type ‘tail -f $LOG_FILE’. In the other window, execute the above command. Adjust the LOG_LEVEL environment variable as needed. For example, to invoke DEBUG logging throughout, type ‘export LOG_LEVEL=4’ into the command window. Alternatively, apply the -D option to any (or all) of the metadata filters in the pipeline.

Yes, the Shibboleth IdP ensures that the metadata is valid, and it will even warn you (optionally) if the metadata is soon-to-be-expired, but the IdP is not aware of the @creationInstant attribute and therefore has no notion of a Freshness Interval. OTOH, the early warning system implemented above does all of the following:

- Requires the @validUntil attribute to exist and ensures that its value is in the future but not too far into the future

- Requires the @creationInstant attribute to exist and ensures that its value is in the past

- Warns if the metadata is soon-to-be-expired

- Warns if the metadata is stale (but not soon-to-be-expired)

The last step is the essence of the early warning system.

Now try the following experiments:

- Set maxValidityInterval to something less than the actual length of the Validity Interval and watch the process fail: an error message will be logged and the metadata will be removed from the pipeline.

- Set maxValidityInterval to something more than the actual length of the Validity Interval and watch the process fail: a warning message will be logged.

- Assuming the actual Validity Interval is 14 days, set the subintervals to overlapping values (say, -E P3D -F P12D) and watch the process fail: a warning message will be logged.

- Set the freshnessInterval to some ridiculously small value (say, -F PT60S) and watch the process fail: a warning message will be logged.

- Set the expirationWarningInterval to some ridiculously large value (say, -E P13D -F PT60S) and watch the process fail: a warning message will be logged.
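The overlap experiment can be checked by hand before running it. The sketch below converts simple durations to seconds and tests whether the two subintervals still fit inside the Validity Interval. Both function names are hypothetical, only single-component durations (PnD, PTnH, PTnM, PTnS) are handled, and the overlap rule shown here (freshness plus warning exceeds validity) is an assumption about how the filter decides, not a description of its actual code.

```shell
# Hypothetical helpers (not part of bash-library or saml-library).
# Convert a single-component ISO 8601 duration (PnD, PTnH, PTnM or PTnS) to seconds.
duration_to_seconds () {
    local n
    case $1 in
        PT*H) n=${1#PT}; echo $(( ${n%H} * 3600 )) ;;
        PT*M) n=${1#PT}; echo $(( ${n%M} * 60 )) ;;
        PT*S) n=${1#PT}; echo $(( ${n%S} )) ;;
        P*D)  n=${1#P};  echo $(( ${n%D} * 86400 )) ;;
        *)    echo "unsupported duration: $1" >&2; return 1 ;;
    esac
}

# Exit status 0 if the Freshness Interval and the Expiration Warning Interval
# together are longer than the Validity Interval (that is, they overlap).
# Arguments: validity, freshness, warning (as durations).
subintervals_overlap () {
    local validity fresh warn
    validity=$(duration_to_seconds "$1") || return 2
    fresh=$(duration_to_seconds "$2") || return 2
    warn=$(duration_to_seconds "$3") || return 2
    [ $(( fresh + warn )) -gt "$validity" ]
}

if subintervals_overlap P14D P12D P3D; then
    echo "overlap: 12 + 3 days exceeds the 14-day Validity Interval"
fi
```

By the same rule, the values suggested earlier (-E P3D -F P5D) do not overlap, since 5 + 3 days is well within 14 days.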

When you've confirmed that the early warning system is behaving as expected, continue with the following configuration steps.

Persisting the Timestamps

Now let’s modify the above command slightly so that the values of the @creationInstant and @validUntil attributes are persisted to a log file. For illustration, we’ll configure a log file in the /tmp directory:

$ timestamp_log_file=/tmp/log.txt

$ touch $timestamp_log_file

With the log file in place, the following command is but a slight variation of the previous command:

$ /bin/cat $backing_file \

| $BIN_DIR/md_require_valid_metadata.bash -E $expirationWarningInterval -F $freshnessInterval \

| $BIN_DIR/md_require_timestamps.bash -M $maxValidityInterval $timestamp_log_file \

| $BIN_DIR/md_parse.bash \

| /usr/bin/tail -n 2

creationInstant 2018-03-29T19:02:46Z

validUntil 2018-04-12T19:02:46Z

$ /bin/cat $timestamp_log_file

2018-03-30T15:14:21Z 2018-03-29T19:02:46Z 2018-04-12T19:02:46Z

Every time you execute the above command, a line is appended to the log file.
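Each log line is enough to compute the age of the metadata at the moment the filter ran. The sketch below does this with awk. It assumes the three columns are, in order, the time the filter ran, the @creationInstant value, and the @validUntil value (the last two match the output of md_parse.bash above; the meaning of the first column is inferred from the sample line). The function name metadata_age is hypothetical.

```shell
# Hypothetical helper (not part of bash-library or saml-library).
# Reads timestamp log lines on stdin and prints, for each line, the age of the
# metadata in hours and the time left until expiration in days.
metadata_age () {
    LC_ALL=C awk '
    # Convert a UTC timestamp such as 2018-03-29T19:02:46Z to epoch seconds.
    function epoch(ts,    p, y, m, d, era, yoe, doy, doe) {
        split(ts, p, /[-T:Z]/)
        y = p[1] + 0; m = p[2] + 0; d = p[3] + 0
        if (m <= 2) y--        # count Jan and Feb as months of the previous year
        era = int(y / 400)
        yoe = y - era * 400
        doy = int((153 * (m > 2 ? m - 3 : m + 9) + 2) / 5) + d - 1
        doe = yoe * 365 + int(yoe / 4) - int(yoe / 100) + doy
        return (era * 146097 + doe - 719468) * 86400 + p[4] * 3600 + p[5] * 60 + p[6]
    }
    NF == 3 {
        printf "%s age_hours=%.1f days_left=%.1f\n", $1, (epoch($1) - epoch($2)) / 3600, (epoch($3) - epoch($1)) / 86400
    }'
}

echo "2018-03-30T15:14:21Z 2018-03-29T19:02:46Z 2018-04-12T19:02:46Z" | metadata_age
# prints: 2018-03-30T15:14:21Z age_hours=20.2 days_left=13.2
```

To process the whole log, feed it to the function: metadata_age < $timestamp_log_file.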

Creating a JSON File

At last we are ready to convert (a portion of) the log file to JSON format. Typically the JSON file will be written to a web directory, but for illustration purposes, let’s write the output in the /tmp directory:

$ out_file=/tmp/out.txt

There’s no need to create the output file ahead of time since it is overwritten with a fresh JSON file every time the following command is executed:

$ /bin/cat $backing_file \

| $BIN_DIR/md_require_valid_metadata.bash -E $expirationWarningInterval -F $freshnessInterval \

| $BIN_DIR/md_require_timestamps.bash -M $maxValidityInterval $timestamp_log_file $out_file \

> /dev/null

By default, the JSON array will have 10 elements. To specify some other array size, add option -n to the metadata filter:

$ /bin/cat $backing_file \

| $BIN_DIR/md_require_valid_metadata.bash -E $expirationWarningInterval -F $freshnessInterval \

| $BIN_DIR/md_require_timestamps.bash -M $maxValidityInterval -n 30 $timestamp_log_file $out_file \

> /dev/null

The above command will output a JSON array of at most 30 elements. These elements correspond to the last 30 lines in the log file.

That’s it! To keep the JSON file up to date, you can of course automate the previous process with cron.
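One way to automate the process is to wrap the pipeline in a function inside a small script and call that script from cron. The following is only a sketch: the function name update_json, the script path, and the hourly schedule are assumptions, and the interval defaults are the illustrative values used in this article.

```shell
# Hypothetical wrapper (not part of bash-library or saml-library).
# Runs the JSON-producing pipeline once. Assumes BIN_DIR, backing_file,
# timestamp_log_file and out_file are set as described in this article.
update_json () {
    "$BIN_DIR/md_require_valid_metadata.bash" \
            -E "${expirationWarningInterval:-P3D}" \
            -F "${freshnessInterval:-P5D}" < "$backing_file" \
        | "$BIN_DIR/md_require_timestamps.bash" \
            -M "${maxValidityInterval:-P14D}" "$timestamp_log_file" "$out_file" \
        > /dev/null
}

# Example crontab entry, assuming the function above is defined and called in a
# script at /path/to/update_json.sh (placeholder path, hourly schedule):
# 0 * * * * /path/to/update_json.sh
```

Note that cron runs jobs with a minimal environment, so the script itself needs to set BIN_DIR, LIB_DIR, CACHE_DIR, LOG_FILE, and TMPDIR rather than rely on values exported in an interactive shell.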